How i fixed the PHP processes killing my server

The website you’re currently visiting is hosted on a VPS (virtual private server) running Ubuntu 16.04 with Nginx as a web server and PHP for most applications.

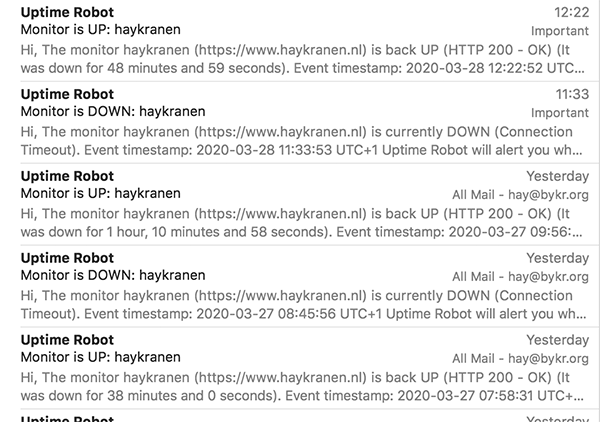

Unfortunately it wasn’t running that well. I use an excellent free service called Uptime Robot that sends me an e-mail if the website is down for more than five minutes. And my inbox looked like this for the past few days.

What you see here are warnings about the fact that Uptime Robot can’t load this website. Maybe i should do something about that.

However, i’m not really a sysadmin. I like running my own server because that allows me lots of freedom in what technologies i can use to run my projects. But i don’t like fixing problems like this. So what i usually do is ssh to my server and run:

    sudo reboot

Which tends to fix most problems. This problem kept returning, though.

So, when i got another mail indicating the server was down i logged in, didn’t reboot it and checked a couple of vital things. The first thing i did was run:

    uptime

Apart from telling you how long your server has been running, it also gives you some other statistics.

    14:36:46 up 1:33, 2 users, load average: 0.08, 0.02, 0.01

The three numbers at the end are the load averages for the past 1, 5 and 15 minutes. This gives an indication of how hard your CPU is working. With a single CPU these values should stay below 0.7 or so, because a load of 1 means that CPU is fully occupied. If you have more than one CPU you can multiply that threshold by the number of CPUs in your machine.
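As a rough illustration of that rule of thumb, the sketch below compares a load average with 0.7 times the number of CPUs. The function names `load_threshold` and `load_is_high` are made up for this example, and the usage lines assume a Linux machine where `/proc/loadavg` and `nproc` are available.

```shell
# Hypothetical helpers, not part of any standard tool.

# Print the rule-of-thumb threshold for $1 CPUs: 0.7 per CPU.
load_threshold() {
    awk -v cpus="$1" 'BEGIN { printf "%.1f\n", cpus * 0.7 }'
}

# Succeed (exit status 0) when the load in $1 exceeds the threshold for $2 CPUs.
load_is_high() {
    awk -v load="$1" -v cpus="$2" 'BEGIN { exit !(load > cpus * 0.7) }'
}

# Usage on a Linux machine:
#   load=$(cut -d ' ' -f 1 /proc/loadavg)    # 1-minute load average
#   if load_is_high "$load" "$(nproc)"; then echo "CPU is busy"; fi
```

With the numbers from my server (a load of 0.08) the check fails, which matches the conclusion that the CPU wasn’t the problem.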

But as you can see, these values are pretty low. So the problem wasn’t that my CPU was overloaded.

The next step was running htop (a more user-friendly version of the classic top command). This showed me something was eating up all my RAM. What was going on?
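htop is interactive, so for a quick non-interactive look at what is using the memory something like the following could be used. It is only a sketch: `mem_by_command` is a made-up helper name, and it simply adds up the resident memory (RSS) that `ps` reports per command name, which overcounts memory that processes share with each other.

```shell
# Sum resident memory (RSS, in KB) per command name and print the totals
# in whole MB, largest first. Expects "rss command" pairs on stdin.
mem_by_command() {
    awk '{ kb[$2] += $1 }
         END { for (c in kb) printf "%d %s\n", kb[c] / 1024, c }' |
        sort -rn
}

# Usage (two -o options so that no header line is printed):
#   ps -e -o rss= -o comm= | mem_by_command | head
```

Command names that contain spaces are cut off at the first word, which is fine for a first impression.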

Because this server is used mainly for serving websites i checked the logs for my web server, nginx.

    sudo vim /var/log/nginx/error.log

And sure enough, around the same time i received all the e-mails about my site going down the log was filled with messages like this:

    2020/03/28 12:05:53 [error] 998#998: *128599 open() "/usr/share/nginx/www/50x.html" failed (2: No such file or directory), client: x.x.x.x, server: www.haykranen.nl, request: "POST /xmlrpc.php HTTP/1.1", upstream: "fastcgi://unix:/run/php/php7.0-fpm.sock", host: "www.haykranen.nl"

There is no 50x error page defined on this server, which explains the open() failure itself. But nginx only goes looking for that page because something upstream is failing. Because the message also mentions PHP i checked my PHP logs:

    sudo vim /var/log/php7.0-fpm.log

And sure enough, there i found the culprit:

    [28-Mar-2020 11:31:03] WARNING: [pool www] server reached pm.max_children setting (50), consider raising it

PHP-FPM handles every concurrent request in its own worker process, and each of those processes takes up a block of memory. How much depends on your settings, but in my case it was around 64MB. The pm.max_children setting is crucial here, because it determines the maximum number of processes. Given that my server only has 2GB of memory, 50 PHP processes of roughly 64MB each would take 3.2GB of RAM. No wonder my server had some issues!
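The same arithmetic can be turned around to estimate how many processes would actually fit. The sketch below is just that, a sketch: `max_children_for` is a made-up helper, and the 512MB set aside for nginx, the database and the rest of the system is an assumption for the example, not a number i measured.

```shell
# Hypothetical helper: how many workers of a given size fit in memory.
#   $1 = total RAM in MB
#   $2 = MB reserved for everything that is not PHP (assumed value)
#   $3 = MB per PHP worker
max_children_for() {
    echo $(( ($1 - $2) / $3 ))
}

# Worst case from the text above: 50 workers of 64 MB each.
echo "50 workers need $(( 50 * 64 )) MB"

# 2048 MB of RAM, an assumed 512 MB kept free, 64 MB per worker.
max_children_for 2048 512 64
```

Under those assumptions roughly 24 workers would fit, less than half of the configured 50.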

To make sure there were actually 50 processes running i executed this command:

    systemctl status php7.0-fpm

And sure enough, it showed me the 50 processes.

Now, i could just lower the pm.max_children setting (or use some other setting to determine that number automatically). However, the more important question is: why is PHP running so many processes?
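Before changing anything it helps to see what the pool is currently configured with. A small sketch, assuming the pool file lives at `/etc/php/7.0/fpm/pool.d/www.conf` (the usual location for the Ubuntu PHP 7.0 packages, but check your own system); `show_pm_settings` is a made-up helper name.

```shell
# Print the active (not commented out) process manager settings from a
# PHP-FPM pool file, for example "pm = dynamic" and "pm.max_children = 50".
show_pm_settings() {
    grep -E '^pm(\.[a-z_]+)?[[:space:]]*=' "$1"
}

# Usage (path is an assumption, see above):
#   show_pm_settings /etc/php/7.0/fpm/pool.d/www.conf
```

After editing the file, PHP-FPM needs to be restarted for the new values to take effect, for instance with `sudo systemctl restart php7.0-fpm`.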

There are many ways to check that, but one simple way is by using the lsof command that will list all open files.

    lsof

This will produce a gigantic list of all open files on the system, so you need a little filtering. Let’s use this together with the grep command.

    lsof | grep php

This still gives loads of results (over 4,000 lines in my case). So i saved it to a file:

    lsof | grep php > php.txt

And then copied it over to my local machine to inspect the contents of the file.

    scp myserver:php.txt .

I did some searching through the file and finally found something interesting. The PHP files that were run and located in my /var/www/ folder (that contains all of my websites) all referred to the same website: sum.bykr.org.
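Instead of searching by hand, the counting could also be left to the shell. This is a sketch that assumes every website lives in its own directory directly under /var/www/; `count_sites` is a made-up name.

```shell
# Count how often each directory directly under /var/www/ shows up in the
# saved lsof output (file name in $1), most frequent first.
count_sites() {
    grep -o '/var/www/[^/ ]*' "$1" | sort | uniq -c | sort -rn
}

# Usage: count_sites php.txt
```

A site that is keeping most of the PHP processes busy should end up at the top of that list.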

The website at that URL was an old project that had been running for years. A hackathon project, it was basically a dynamic mirror of Wikipedia with a different skin and some added features. The thing is, because pages were generated dynamically it could render any page on the English Wikipedia. And not just that: it also contained links to every language edition of Wikipedia. Meaning it potentially could generate millions of pages.

And sure enough, when i took a look at the log files of this old website, it was filled with endless requests from search engine spiders trying to index the complete Wikipedia in 200 languages.
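A quick way to see who is making all those requests is to count the user agents in the access log. The sketch below assumes nginx’s default "combined" log format, where the user agent is the last quoted field; `top_user_agents` is a made-up helper and the log path in the usage line is a placeholder.

```shell
# Print the most frequent user agents in an access log.
#   $1 = path to the log, $2 = how many to show (default 10)
# Splitting each line on double quotes puts the user agent in field 6:
#   ip - user [time] "request" status bytes "referer" "user agent"
top_user_agents() {
    awk -F '"' '{ print $6 }' "$1" | sort | uniq -c | sort -rn | head -n "${2:-10}"
}

# Usage (placeholder path): top_user_agents /path/to/access.log 5
```

If the list is dominated by crawler names, the traffic is coming from spiders and not from actual visitors.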

So in the end, the fix for the problems with my server was very simple: just disable this old website. I put an ‘out of order’ page on sum.bykr.org, so i could finally stop doing maintenance work.

And i wrote a blog post about it.